AI Robots and The Divine Council

In the original Jurassic Park film, where prehistoric titans are brought back to life and wreak havoc on an island, Dr. Ian Malcolm famously says, “Yeah, yeah, but your scientists were so preoccupied with whether or not they could, that they didn’t stop to think if they should.” This question seems to be on a lot of people’s minds concerning AI these days.

Should we keep developing and using AI and robotics?

Guess what…we just can’t help ourselves. If humans can create something, we are going to take it to its limits. Progress, improvement, evolution, and technological development are in our blood. As Eve Poole states in Robot Souls, referring to AI, “Not to do so, when we increasingly have the technology, is to voluntarily embrace our limitations.”[1] If creating the atomic bomb, which could wipe out the world in the wrong hands, did not stop us, the risk of machines taking over the world will not either.

Creation is in our DNA. It is in “our code”. The Judeo-Christian faith tradition believes humans are made in God’s image. The first thing ancient Israel records God doing in Genesis is creating.

Robot Souls was much more fascinating than I anticipated. For a short read, it covered a lot of deep issues surrounding big philosophical concepts like ontology, epistemology, and existentialism. However, it surprisingly got my theological wheels turning. AI has put humanity into a unique situation, especially if we could somehow code souls into robots. As Poole was exploring rules, boundaries, and laws for both humans and AI to coexist, I thought, “We are attempting to think like a divine creator as we make something that may, or may not, have some kind of consciousness.” This special time in history is forcing us to reflect on and examine who and what we are as humans and who we want to be as we create this complex, and possibly sentient, technology.

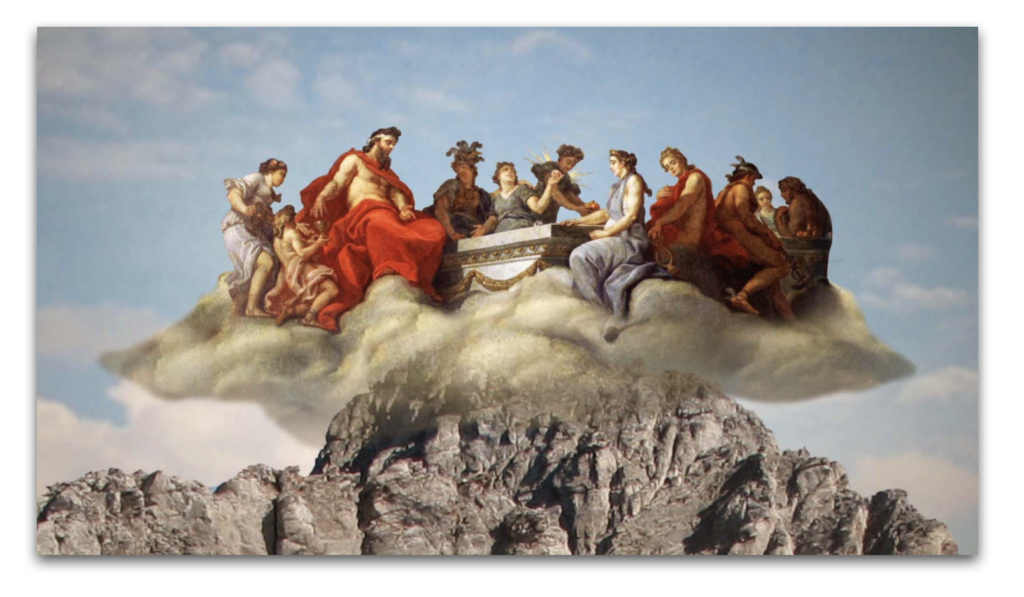

Responsibility was the main theological and moral issue I found myself thinking about while reading. Poole says, “Therefore we are morally responsible for them…This is not because of what they are due, but because of who we are…It dehumanizes us if we abuse them.”[2] The fact that Poole is already referring to AI robots as conscious beings is a bit eerie, but her challenge to be loving and benevolent creators as we develop this technology makes it seem like we are sitting around a heavenly table as a divine council.

Let’s think about this. Part of “coding a soul” is allowing mistakes, free will, subjective meaning, emotions, uncertainty, and storytelling. Poole suggests that higher degrees of free will should be the final things coded into robots, once their maturity and choice-making have reached a safe threshold. That’s right, we are thinking about robots learning and maturing like humans.

Hang with me here. I could not help but think about Paul, when he says in 2 Corinthians 3:3, “You show that you are a letter from Christ, the result of our ministry, written not with ink but with the Spirit of the living God, not on tablets of stone but on tablets of human hearts.” The New Testament depicts a new Spirit emerging in the early church that writes the law on their hearts. Tom Holland, in his book Dominion, argues that this concept of conscience, developed by Paul, is one of the most distinctive characteristics of the Judeo-Christian faith.[3] He believes that to be guided by a certain type of moral conscience is to be Christian.

Over the years I’ve read several theories and theologians who have noticed a developmental process in Israel’s history. Early on, the law, which was concrete and rigid, was necessary for Israel’s collective stage of development, much as firm rules are necessary for young children. However, once a threshold was reached, similar to what Poole says about AI robots, more rope could be given, and the rules could be relaxed. This is similar to what parents do with their children as they develop, as Erik Erikson, the Harvard psychiatrist, lays out in his theories on human development.[4] Poole explicitly says, “This theme of taking responsibility for ‘family members’ is why many have argued that the metaphor of parenting is the best way to frame our relationships with AI.”[5] What a wild thought, right? Humanity has put itself in a situation where we are forced to entertain the idea of being wise and benevolent parents of something other than biological beings, and to wrestle with what that means.

As stated earlier, this whole AI scenario has challenged and forced me to think through my faith tradition, Christian theology, and human nature. Last semester I mentioned Timothy Keller’s book The Prodigal God, which argues that the Gospel has far more to do with who God is as a loving parent than with our mistakes or even good works as human children with free will who constantly search for meaning and navigate their emotions and brain chemistry. I believe much of the beauty of our faith tradition has been a gradual revelation of who God is and who we are as children of God. We are still standing in this tradition of discovery by thinking through our relationship to AI robots in the 21st century, something unfathomable in Moses’, David’s, or Paul’s day. Could we as humans, as we think about how to be loving, wise, and benevolent ‘parents’ to robots, surpass the love, wisdom, and heart of our creator? I think not. This AI conversation not only causes me to think about who we are as we create, develop, and especially use this technology, but also prompts me to reflect on God’s nature as the creator and parent of a global community created or ‘coded’ with an extremely complex nature.

[1] Poole, Eve. Robot Souls: Programming in Humanity (New York: CRC Press, Taylor & Francis Group, 2024), 29.

[2] Poole, Robot Souls, 16.

[3] Holland, Tom. Dominion: The Making of the Western Mind (London: Abacus, 2020), 77.

[4] Erikson, Erik H., and Robert Coles. The Erik Erikson Reader (New York: W.W. Norton, 2001), 191.

[5] Poole, Robot Souls, 113.

6 responses to “AI Robots and The Divine Council”

I love your mind Adam. Seriously.

Right off the bat you captured me with the “could” vs. “should” argument.

1 Corinthians 6:12 reminds us that “all things are permissible but not all things are beneficial.” Sure we could, but, well, should we?

Thanks my friend, yeah it just seems like we can’t help ourselves, huh? If we can make or create it, we are going to do it. The ethical and practical questions we have to navigate in the 21st century are crazy, but STILL no flying cars. What’s up with that!?!

Yes, I agree with John, I think the could vs. should debate is a great place to start the AI conversation. And we have historical precedent for humanity choosing to limit the use of technology when it becomes destructive, at least to some extent (the non-proliferation treaty is what comes to mind). But once again, we come back to the question that we’ve faced regarding other issues: Who gets to decide?

Exactly, that’s why Poole’s book is intriguing. I would love to think everyone involved is asking the types of questions she poses. One can only hope. It’s an exciting and scary time. Not sure if you have seen AI robots interviewed online. It is both inspiring and eerie.

Ah… human mistakes. It’s late at night, I’m behind in my other homework, and here I sit trying to read and respond to this topic and I screwed up…I had a lovely reply to your blog and then it got erased because I was not logged in. Where is AI to save what I wrote? JK, but it is the main gist of what your post made me think of: where does suffering fit in all of this? Part of resilience, mystery, and soul is that we are not protected from suffering. In medicine I’ve watched as more and more advancements have come to help people move towards cures, but we fail to see that the unintended consequence is suffering. We seem to be forgetting to ask whether we should do something instead of whether we could. In fact that is what most doctors say in order to help patients feel like they have a choice and control, which they do, but we’ve been trained to trust science and advancements so much that we fail to stop and think! What do you think? Is suffering a key component of learning and resilience, and therefore AI will never fully be like us?

Ahhh I’ve had that happen myself and it’s incredibly frustrating! That’s a really good point Jana, suffering is part of the human experience, and how do we really “code” pain into AI and robotics? Pleasure as well; Poole mentioned the inability to even replicate “smelling a strawberry”. Even the simplest of things we take for granted every day are complex and stump the greatest minds. Donald Hoffman, from MIT, talks about this current predicament with simple things like “tasting chocolate”. Good catch!!